🤖 AIBytes

Researchers built DT-GPT, a large language model trained on real patient data.

The model predicts how a patient’s lab values and health status might change in the future.

🔬 Methods

They tested the model on 3 datasets:

Lung cancer: 16,496 patients. Predicted 13 weeks of blood test results.

Intensive care unit (ICU): 35,131 patients. Predicted the next 24 hours of respiratory rate, oxygen saturation, and magnesium.

Alzheimer’s disease: 1,140 patients. Predicted 24 months of cognitive test scores.

LLM: BioMistral-7B, fine-tuned on electronic health record (EHR) data.

📊 Results

Accuracy improved significantly compared with the baseline models:

Lung cancer: 3.4% better

ICU: 1.3% better

Alzheimer’s disease: 1.8% better

The model captured the typical relationships between lab results.

Strong at identifying mild anemia.

Strong at identifying elevated LDH.

Weak at detecting rare, severe anemia.

Predicted dozens of lab values it was never trained on.

Created multiple possible futures for each patient and combined them into one final prediction.

Remained accurate even when large parts of the chart were missing or contained misspellings.
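DT-GPT generated several possible futures per patient and then combined them into one forecast. The sketch below shows one simple way to do that combination: averaging the sampled values at each time step. Averaging is an assumption here (a median would be another reasonable choice), and `combine_trajectories` and the hemoglobin numbers are hypothetical, not taken from the paper.

```python
from statistics import mean


def combine_trajectories(samples: list[list[float]]) -> list[float]:
    """Combine several sampled forecasts into one by averaging each time step.

    Each inner list is one possible future for a single lab value
    (e.g., weekly hemoglobin), and all lists cover the same time steps.
    """
    if not samples:
        raise ValueError("need at least one sampled trajectory")
    length = len(samples[0])
    if any(len(s) != length for s in samples):
        raise ValueError("all trajectories must cover the same time steps")
    # zip(*samples) groups the values from every sample at the same time step
    return [mean(step) for step in zip(*samples)]


# Three made-up hemoglobin forecasts (g/dL) over 4 weeks for one patient
samples = [
    [12.0, 11.8, 11.5, 11.4],
    [12.1, 11.9, 11.7, 11.6],
    [11.9, 11.6, 11.3, 11.2],
]
print(combine_trajectories(samples))
```

Averaging smooths out the spread between individual samples; the spread itself can also be kept as a rough signal of how uncertain the forecast is.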

🔑 Key Takeaways

LLMs can act as early “digital twins” of patients, forecasting how their health will change.

Works well with messy, incomplete electronic health record data.

The model understands patterns between different lab tests.

Rare/severe events are hard to predict.


🔗 Makarov N, Bordukova M, Quengdaeng P, et al. Large language models forecast patient health trajectories enabling digital twins. npj Digital Medicine. 2025;8:588. doi:10.1038/s41746-025-02004-3.

Researchers tested how well ChatGPT can rewrite scientific abstracts in simple language for clinicians and the public.

🔬 Methods

Dataset: 30 scientific abstracts from different medical specialties.

Intervention: ChatGPT rewrote each abstract twice:

one version for clinicians

one for the general public

Who evaluated the rewrites:

Two clinicians reviewed the clinician-focused versions.

Two non-clinical readers reviewed the public-focused versions.

All reviewers used a structured scoring rubric to rate accuracy, clarity, and usefulness.

Reviewers also identified types of errors (missing details, distortions, hallucinations).

Outcomes:

Readability

Perceived accuracy

Clarity and usefulness

Error type (omissions, distortions, hallucinations)

📊 Results

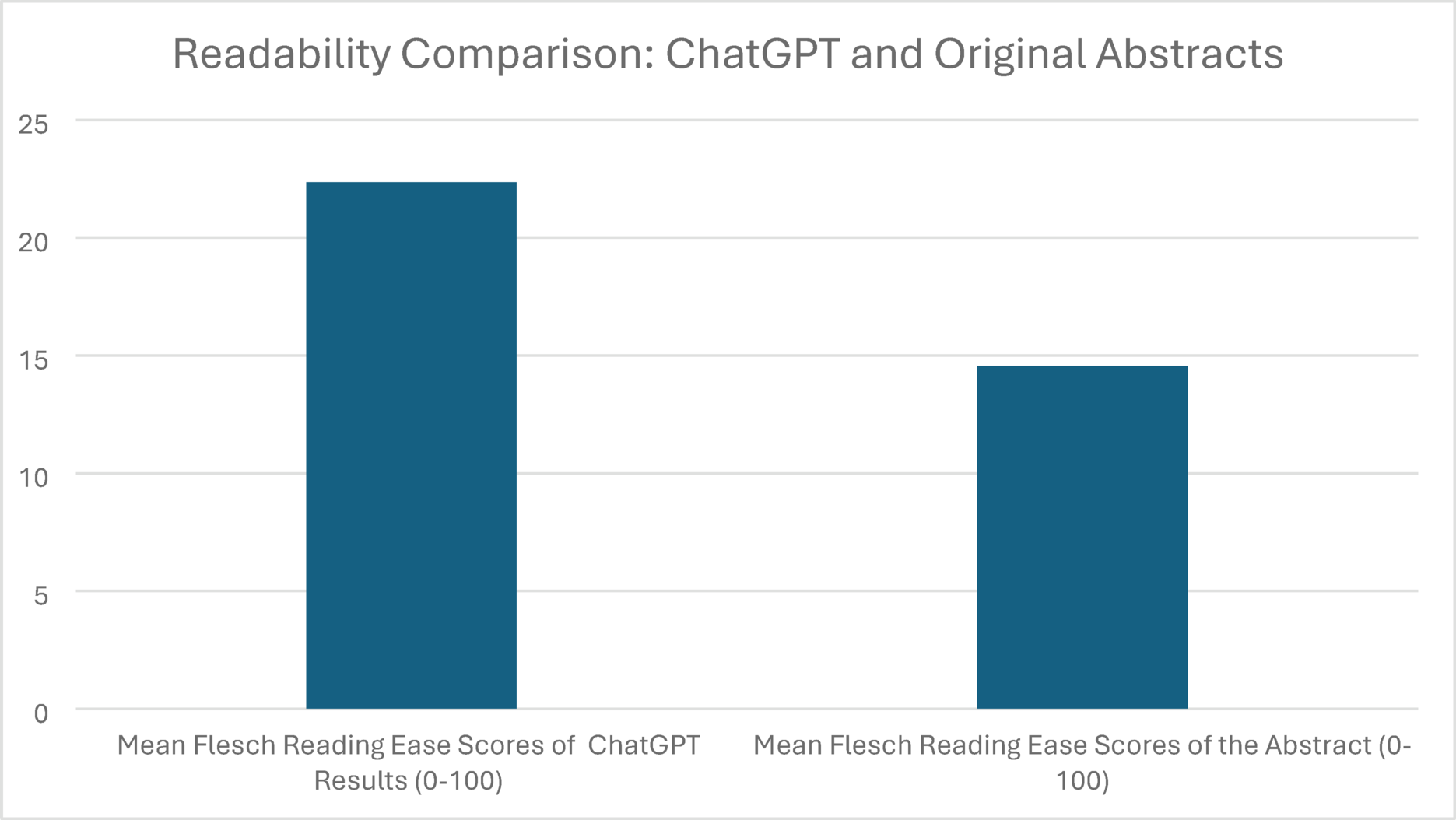

Both clinician and public versions had better readability scores than the original abstracts.

The simplified versions were easier to follow and more helpful than the originals.

Public versions had less technical language. Clinician versions kept more of the study methodology.

Reviewers detected occasional omissions and oversimplifications. Hallucinations were rare.
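The summary doesn’t name the readability metric the study used. Two widely used options are the Flesch Reading Ease score (higher means easier) and the Flesch-Kincaid Grade Level (lower means easier). The sketch below assumes those two and computes both from word, sentence, and syllable counts. The counts are invented for illustration, and the hard part in practice (counting syllables) is left out.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores mean easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: approximate U.S. school grade needed to read the text."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# Hypothetical counts: a dense original abstract vs. a plain-language rewrite
original = dict(words=250, sentences=10, syllables=450)
rewrite = dict(words=200, sentences=14, syllables=300)

for label, counts in [("original", original), ("rewrite", rewrite)]:
    print(label,
          round(flesch_reading_ease(**counts), 1),
          round(flesch_kincaid_grade(**counts), 1))
```

Shorter sentences and fewer syllables per word push Reading Ease up and Grade Level down, which is exactly what a plain-language rewrite aims for.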

🔑 Key Takeaways

ChatGPT can simplify scientific abstracts accurately while keeping the main message intact.

Public versions focus on improving readability.

Clinician versions keep more methods and results, making them useful for quick reviews.

Human oversight is still needed to catch omissions and ensure clinical precision.

🔗 Dogru-Huzmeli E, Moore-Vasram S, Phadke C, Shafiee E, Amanullah S. Evaluating ChatGPT’s ability to simplify scientific abstracts for clinicians and the public. Scientific Reports. 2025;15:33466. https://doi.org/10.1038/s41598-025-11086-8

🦾 TechTools

Elicit — Faster literature scans (Link)

AI research assistant that finds papers, pulls key data, and suggests related studies.

Great for getting a quick overview of a research topic.

Type a question like “AI for predicting stroke recovery” and skim the results.

Readwise Reader — One inbox for all your reading (Link)

Saves articles, PDFs, newsletters, and threads in one place. Offers AI summaries.

Keeps all your content organized so you can come back to it when you have time.


Luma AI — Realistic 3D scenes (Link)

Turns your photos or short clips into clean, realistic 3D scenes.

Generates images directly from a text prompt.

Upload a short video, choose a capture mode, and let the app generate a 3D scene.

Prompting: Zero-Shot Chain-of-Thought

A simple way to improve an LLM’s reasoning by adding a single cue like:

“Let’s think step by step.”

When to use it

When you want the model to explain its reasoning step by step.

To save time when you can’t prepare worked examples (no examples is what makes it “zero-shot”).

Prompt

“You are a PM&R physician.

Question: For a patient 4 weeks post-stroke with shoulder pain, what diagnostic steps should be considered first?

Let’s think step by step.”
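If you reuse this pattern often, a small template keeps the role, the question, and the cue consistent. The sketch below only builds the prompt text; it does not call any model API, and the function name `zero_shot_cot_prompt` is hypothetical. The prompt contains no worked examples, which is what makes it zero-shot.

```python
# The single reasoning cue that turns a plain prompt into zero-shot chain-of-thought
COT_CUE = "Let's think step by step."


def zero_shot_cot_prompt(role: str, question: str, cue: str = COT_CUE) -> str:
    """Build a prompt from a role, a question, and a reasoning cue (no examples)."""
    return f"You are a {role}.\n\nQuestion: {question}\n\n{cue}"


prompt = zero_shot_cot_prompt(
    "PM&R physician",
    "For a patient 4 weeks post-stroke with shoulder pain, "
    "what diagnostic steps should be considered first?",
)
print(prompt)
```

Swap in a different question and keep the cue at the end, so the model reads it right before it starts answering.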

Remember to always verify with original sources and your clinical judgment.

🧬AIMedily Snaps

As always, thank you for taking the time to read.

You’re already ahead of the curve in medical AI. Don’t keep it to yourself: forward AIMedily to a colleague who’d appreciate the insights.

You can either:

💌Forward this email

📲Share this link on your phone.

Thank you for sharing!

Itzel Fer, MD PM&R

Join my Newsletter 👉 AIMedily.com

Forwarded this email? Sign up here

PS. If you have 1-2 minutes, could you help me answer a few quick questions? 👉Survey. Thank you! 😊