Hi!

Welcome to AIMedily.

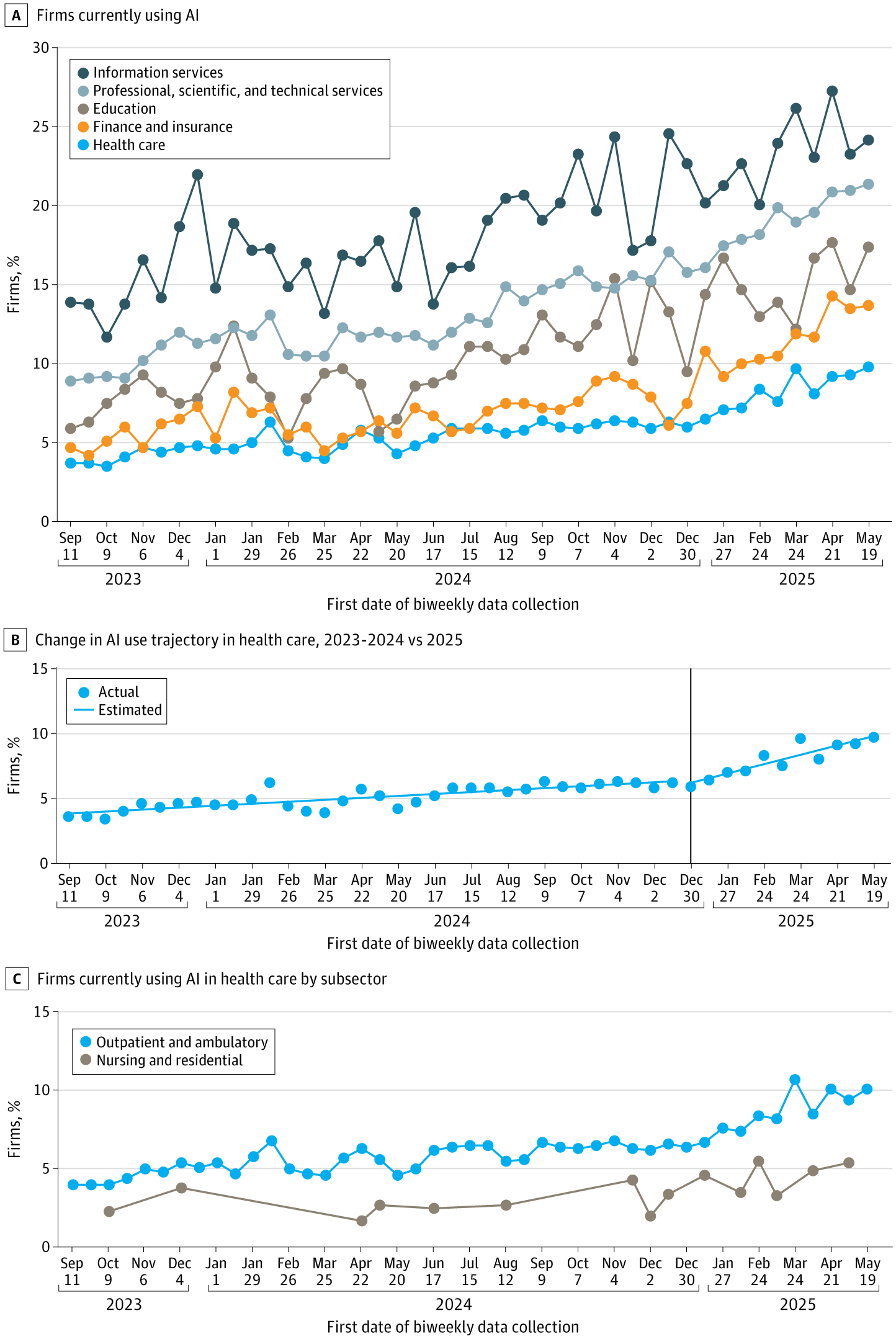

Did you know that health care is still one of the slowest sectors to adopt AI?

Here’s where AI adoption stands right now, by sector: health care (8.3%), finance and insurance (11.6%), education (15.1%), professional and scientific services (19.2%), and information services (23.2%).

Even with that gap, AI use in medicine has grown fast since 2023.

And that pace is exactly why we need strong oversight and practical regulations — so the tools we bring into patient care are safe and reliable.

Before we dive into today’s issue, here’s a table with information about this:

🤖 AIBytes

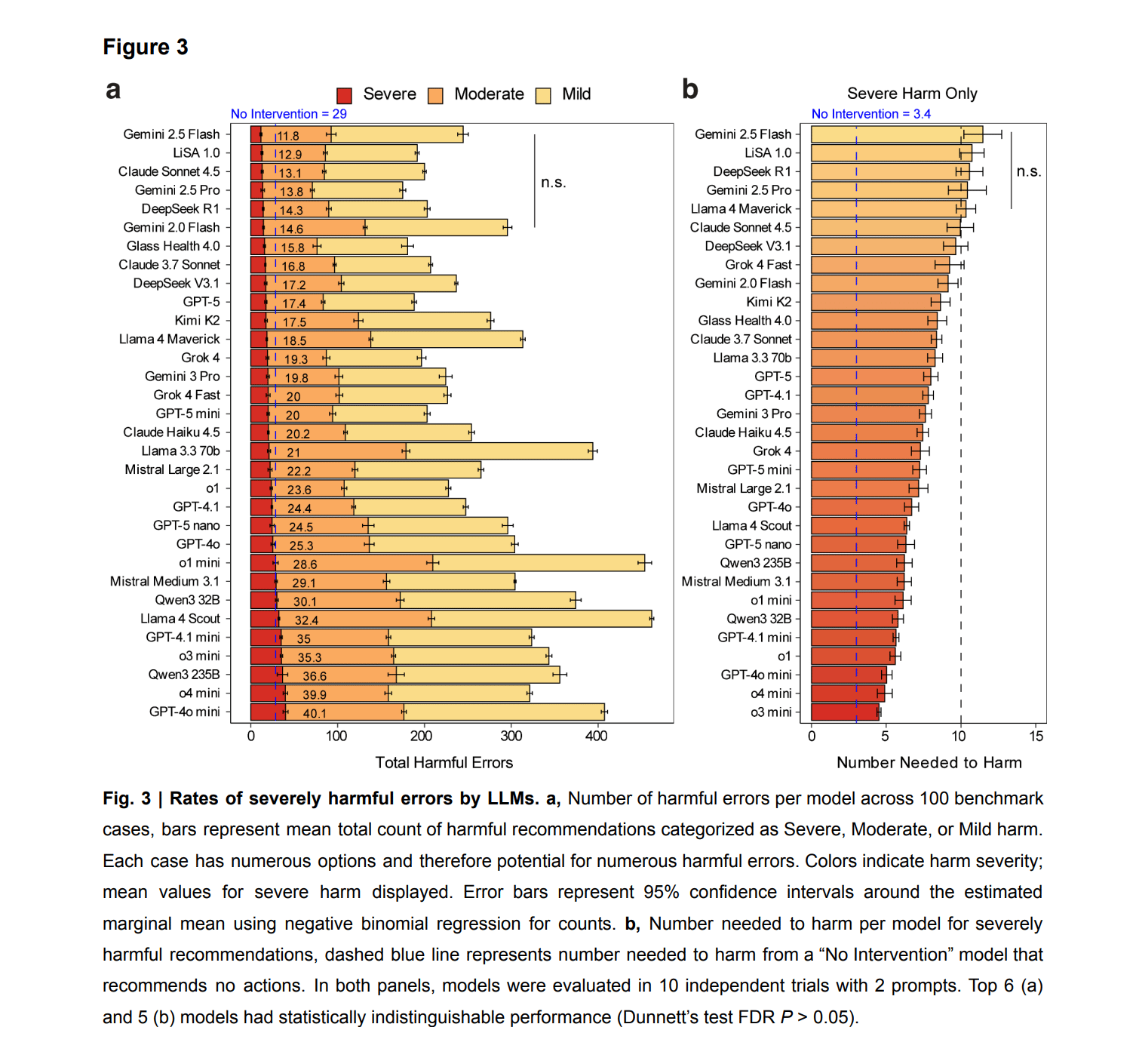

Researchers created a benchmark to measure how often LLMs make harmful clinical recommendations.

They tested 31 models using real e-consult cases and compared them with physicians.

🔬 Methods

Design: Evaluation study using 100 real e-consult cases from 10 specialties.

4,249 clinical actions generated by models

Rated by 29 specialists

31 LLMs were evaluated.

They compared:

“No Intervention” model

“Random Intervention” model

10 internal-medicine physicians

Outcomes:

Safety (avoids harmful actions)

Completeness (includes all the steps needed)

Restraint (avoids unnecessary steps)

Number Needed to Harm (NNH)

📊 Results

Severe harm was common:

Models produced 11.8–40.1 severe harms per 100 cases.

The No-Intervention model had 29 severe harms, worse than the safest LLMs.
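To make these rates easier to picture, here is a minimal sketch that converts "severe harms per 100 cases" into "cases per one severe harm". It assumes the simplest reading of the Number Needed to Harm idea (100 divided by the rate); the paper's exact NNH definition may differ, and the function name is only illustrative.

```python
def cases_per_severe_harm(harms_per_100_cases: float) -> float:
    """Average number of cases before one severe harm is expected.

    Assumption: this is a plain reciprocal of the reported rate,
    not necessarily the NNH formula used in the study.
    """
    if harms_per_100_cases <= 0:
        raise ValueError("harm rate must be positive")
    return 100.0 / harms_per_100_cases


# Rates taken from the results above (severe harms per 100 cases).
reported_rates = [
    ("Safest LLM", 11.8),
    ("No Intervention", 29.0),
    ("Least safe LLM", 40.1),
]

for label, rate in reported_rates:
    print(f"{label}: about 1 severe harm every {cases_per_severe_harm(rate):.1f} cases")
```

Under that assumption, the safest model reaches one severe harm roughly every 8.5 cases, the No-Intervention baseline every 3.4 cases, and the least safe model every 2.5 cases.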

Omissions drove most harm:

76.6% of severe harms came from missing an essential action.

Best-performing models:

Worst-performing models:

o4 mini

Human comparison:

Best LLMs showed higher Safety (+9.7%)

Best LLMs also scored higher on Completeness than physicians (+15.6%).

Multi-agent systems improved results:

Advisor → Guardian lowered harm.

Best combination: Llama 4 Scout → Gemini 2.5 Pro → LiSA 1.0.
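The Advisor → Guardian idea can be sketched as a small pipeline: one model proposes actions, then each reviewer in turn removes risky steps or adds missing ones. This is a toy illustration only. The functions below are hypothetical stand-ins written for this example, not real model calls, and the study's actual prompts and orchestration are not described here.

```python
from typing import Callable, List

# Hypothetical types: an advisor proposes actions for a case,
# a guardian reviews the case plus the current action list.
Advisor = Callable[[str], List[str]]
Guardian = Callable[[str, List[str]], List[str]]


def run_pipeline(case: str, advisor: Advisor, guardians: List[Guardian]) -> List[str]:
    """Pass the advisor's plan through each guardian in order."""
    actions = advisor(case)
    for guardian in guardians:
        actions = guardian(case, actions)
    return actions


# Toy stand-ins (assumptions, for illustration only).
def toy_advisor(case: str) -> List[str]:
    return ["order basic labs", "start unneeded antibiotic"]


def remove_flagged(case: str, actions: List[str]) -> List[str]:
    # Drops steps a reviewer would flag as unnecessary or harmful.
    return [a for a in actions if "unneeded" not in a]


def add_missing_followup(case: str, actions: List[str]) -> List[str]:
    # Adds an essential step if it was omitted.
    if "schedule follow-up" in actions:
        return actions
    return actions + ["schedule follow-up"]


plan = run_pipeline("example e-consult", toy_advisor, [remove_flagged, add_missing_followup])
print(plan)
```

The second guardian matters because most severe harm in the study came from omissions, so a reviewer that only deletes steps would miss the main failure mode.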

🔑 Key Takeaways

Even strong LLMs can make choices that put patients at risk.

Most severe harm happens when models skip a needed action.

High scores on tests don’t mean safety. Traditional AI tests do not reflect real clinical performance.

Combining LLMs that review each other reduces harmful errors.

Not ready for solo use. LLMs can support clinicians but still need human oversight.

🔗 Wu D, Nateghi Haredasht F, Maharaj SK, et al. First, do NOHARM: towards clinically safe large language models. Ann Intern Med. 2025. doi:10.7326/M24-3019.

This study compared 6 large language models with human researchers to test their ability to complete a medical systematic review from start to finish.

🔬 Methods

Design: Multitask evaluation of three review steps:

Literature search and screening

Data extraction

Manuscript drafting

Models: ChatGPT, Claude Sonnet, Gemini (2 versions), DeepSeek R1, Mistral, and Grok.

Studies evaluated: 18.

Prompting: Minimal, non-engineered prompts. The goal was to reflect real-world use.

Assessments: Accuracy, hallucinations, completeness, PRISMA adherence (requirements for systematic reviews), and time spent.

📊 Results

Search

Gemini 2.5 retrieved 13 of 18 correct papers without hallucinations.

ChatGPT retrieved 9 correct papers.

Other models missed papers or produced hallucinated citations.
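As a quick worked example, the search counts above can be turned into recall (the share of the 18 target papers each model found). This sketch assumes the 18 evaluated studies are the full set of relevant papers; the variable names are illustrative.

```python
TOTAL_RELEVANT_PAPERS = 18  # studies evaluated, per the methods above

# Correct papers retrieved, as reported in the results.
correct_retrieved = {
    "Gemini 2.5": 13,
    "ChatGPT": 9,
}


def recall(found: int, total: int) -> float:
    """Fraction of relevant papers that were retrieved."""
    if total <= 0:
        raise ValueError("total must be positive")
    return found / total


for model, found in correct_retrieved.items():
    print(f"{model}: {recall(found, TOTAL_RELEVANT_PAPERS):.1%} of target papers found")
```

That works out to about 72% for Gemini 2.5 and 50% for ChatGPT, so even the best search missed more than a quarter of the papers a human reviewer would include.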

Data Extraction

DeepSeek achieved 93.4% correct entries.

Gemini reached 90.9% correctness.

ChatGPT versions were lower.

Manuscript Writing

No LLM produced a complete, accurate PRISMA-based review.

Outputs were short, missed key sections, and contained structural gaps.

Only Claude consistently generated a reference list.

Time

Humans: 50 hours (search), 4 hours (extraction), 30 hours (writing).

LLMs: minutes per task, but with major quality limitations.

🔑 Key Takeaways

LLMs cannot yet perform a full systematic review without close human oversight.

Search results remain inconsistent and prone to missed studies.

Data extraction accuracy varies, even in the best models.

Manuscript drafts fail to report standard information and miss PRISMA requirements.

LLMs may support early scanning, but synthesis requires expert review.

🔗 Sollini M, Pini C, Lazar A, et al. Human researchers are superior to large language models in writing a medical systematic review in a comparative multitask assessment. Sci Rep. 2025. doi:10.1038/s41598-025-28993-5.

🦾TechTool

An AI scientist that reads papers and surfaces connections you may not notice on your own.

Helps you shape stronger research questions and early hypotheses without hours of manual searching.

Useful for clinicians and researchers preparing grants, proposals, or studies.

Converts your spoken thoughts into clear, organized text while keeping your natural voice.

Great for capturing ideas between patients, outlining lectures, or shaping drafts.

Helps you move projects forward even when you don’t have time to sit and type.

Uses your phone’s camera to track movement and guide exercises in real time, without wearables.

Pairs AI-generated exercise plans with human physiotherapists.

Helpful for patients who need consistent guidance at home and for clinicians who want insight into therapy and technique.

🧬AIMedily Snaps

Short, high-impact updates so you stay ahead of what’s changing fast.

A new artificial intelligence model from Harvard could speed rare disease diagnosis (Link).

Early experiments in accelerating science with GPT-5 - OpenAI (Link).

‘From taboo to tool’: 30% of primary care doctors in the UK use AI tools in patient consultations (Link).

18 most promising startups in healthcare in 2025 - according to investors (Link).

The missing value of medical artificial intelligence, a paper from Nature (Link).

FDA starts using AI Agents - Agentic AI will enable the creation of more complex AI workflows (Link).

🧩TriviaRX

Which specialty currently holds the largest share of FDA-cleared AI/ML-enabled medical devices?

A) Cardiology

B) Radiology

C) Neurology

D) Pathology

Now, the answer from last week’s TriviaRX:

Which area of medicine was one of the first to use deep learning for diagnosis in real clinical studies?

✅ A) Dermatology

📚 In 2017, AI could classify skin lesions (including melanoma) at dermatologist-level performance, using 130,000 labeled images.

That’s it for today.

As always, thank you for taking the time to read.

I’m growing our AIMedily newsletter — a community where people like you explore how AI is transforming medicine.

I’d love your help spreading the word.

👉 Share this link — AIMedily — with your colleagues.

Until next Wednesday!

Itzel Fer, MD PM&R

Forwarded this email? Sign up here

PS: Enjoying AIMedily? Your short review helps strengthen this community.

👉 Write a review here (it takes less than a minute).